Do people ask good questions?

Abstract

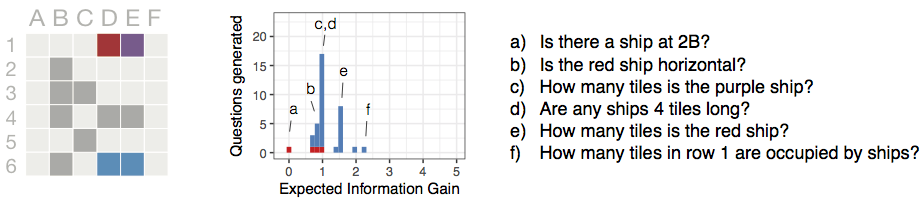

People ask questions in order to efficiently learn about the world. But do people ask good questions? In this work, we designed an intuitive, game-based task that allowed people to ask natural language questions to resolve their uncertainty. Question quality was measured through Bayesian ideal-observer models that considered large spaces of possible game states. During free-form question generation, participants asked a creative variety of useful and goal-directed questions, yet they rarely asked the best questions as identified by the Bayesian ideal observers (Experiment 1). In subsequent experiments, participants strongly preferred the best questions when evaluating questions that they did not generate themselves (Experiments 2 and 3). On the one hand, our results show that people can accurately evaluate question quality, even when the set of questions is diverse and an ideal-observer analysis has large computational requirements. On the other hand, people have a limited ability to synthesize maximally informative questions from scratch, suggesting a bottleneck in the question-asking process.